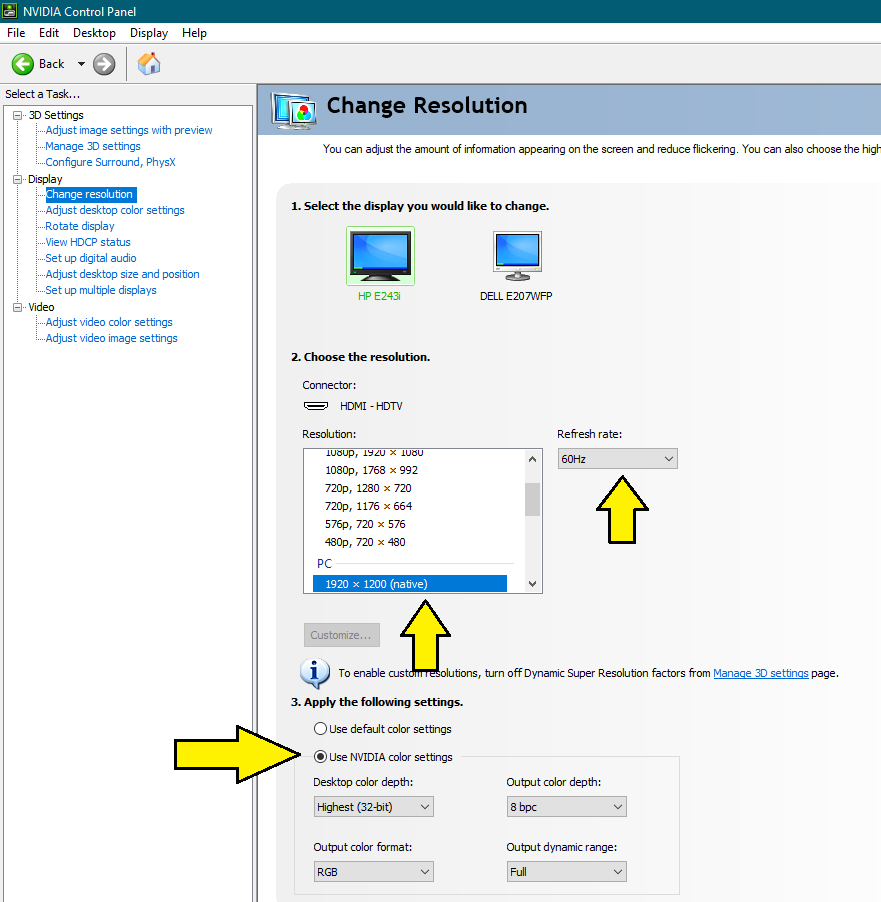

Heck, 8-bit might even yield a better result, depending on how the monitor handles 10-bit; the panel might be 10-bit, but it might just as well be 8-bit. It is possible to enable dithering on Nvidia cards and get better color precision. In fact, dithering is still recommended for color calibration because LUTs have 16-bit precision, so it is best to enable dithering before calibration and leave it enabled. There is also no benefit from using 10-bit on today's LCD monitors, because they always use 8-bit panels + A-FRC, and the dithering algorithms on GPUs are identical to A-FRC. Otherwise, applications with 10-bit output should actually be dithered to 8-bit on 8-bit displays, so no issues should happen there. Note: without this reference mode, Windows or applications, and more specifically games (especially old ones), can modify gamma, and this will cause slight banding on 8-bit.
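
To illustrate why dithering helps when the LUT is more precise than the output, here is a rough sketch of temporal dithering from a 16-bit value down to 8-bit. This is not what the Nvidia driver or a monitor's A-FRC actually runs; the function name `temporal_dither`, the frame count and the error-carrying scheme are all my own assumptions, picked only to show the idea.

```python
def temporal_dither(value16, frames=16):
    """Spread a 16-bit level over several 8-bit frames (illustrative only).

    Hypothetical helper: carries the rounding error from one frame to the
    next so that the average of the emitted 8-bit levels approaches the
    fractional target level.
    """
    target = value16 / 65535 * 255  # ideal level on the 8-bit scale (fractional)
    out = []
    err = 0.0
    for _ in range(frames):
        want = target + err                       # target plus carried error
        level = min(255, max(0, round(want)))     # nearest displayable level
        err = want - level                        # remember what was lost
        out.append(level)
    return out


if __name__ == "__main__":
    frames = temporal_dither(33000)
    print(frames)                     # a mix of two neighbouring 8-bit levels
    print(sum(frames) / len(frames))  # average lands close to the 16-bit target
```

Without dithering the same value would simply be truncated or rounded to one 8-bit level on every frame, and the extra LUT precision would be thrown away.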

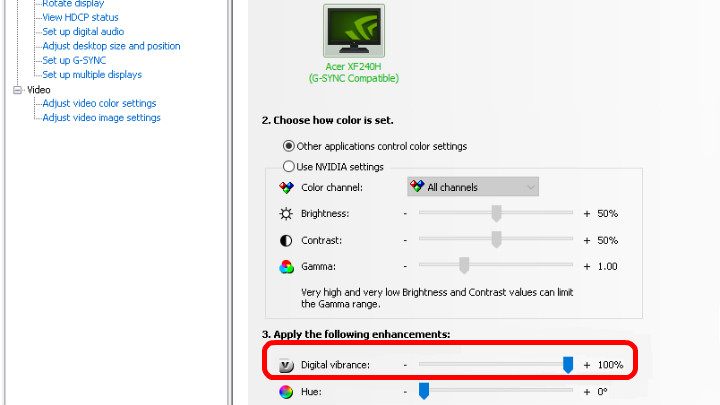

Otherwise colors need to be set to 50%/50%/1.00 for brightness/contrast/gamma, and then there should be no banding. If you do not use any ICC profile and did not change any color setting in the Nvidia control panel, then you can check "Override to reference mode" under "Adjust desktop color settings" to prevent changes to the video card LUT. Otherwise, the Windows desktop and the wallpaper you use are 8-bit. Any modification of the graphics card color lookup tables will result in reduced color gradation, because you cannot do color correction of an 8-bit desktop with 8-bit color precision. If you use an Nvidia card, this might happen because Nvidia, despite having dithering capabilities, doesn't enable them by default, and these settings are buried deep inside the Windows registry.
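
The point about losing gradation can be checked with a few lines of Python. This is only a sketch: it assumes the gamma adjustment is a simple power law with exponent `1/gamma` and that the result is rounded straight back to 8-bit with no dithering. The real curve applied by the driver or an ICC profile may differ, and the function names are made up for the example.

```python
def gamma_lut_8bit(gamma):
    """Build a 256-entry 8-bit LUT for an assumed power-law gamma change."""
    return [round(255 * (i / 255) ** (1 / gamma)) for i in range(256)]


def distinct_levels(lut):
    """Count how many different output levels the LUT can still produce."""
    return len(set(lut))


if __name__ == "__main__":
    for g in (1.0, 1.1, 1.2):
        # gamma 1.00 is the untouched setting; anything else merges some levels
        print(g, distinct_levels(gamma_lut_8bit(g)))
```

With gamma left at 1.00 every one of the 256 input levels keeps its own output level. Any other value maps some neighbouring inputs to the same output, and those merged steps are what shows up as banding on an 8-bit output without dithering.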
